Russ Allbery: On time management

Last December, the Guardian published a long essay by Oliver Burkeman entitled "Why time management is ruining our lives". Those who follow my book reviews know I read a lot of time management books, so of course I couldn't resist this. And, possibly surprisingly, not to disagree with it. It's an excellent essay, and well worth your time.

Burkeman starts by talking about Inbox Zero:

"If all this fervour seems extreme - Inbox Zero was just a set of technical instructions for handling email, after all - this was because email had become far more than a technical problem. It functioned as a kind of infinite to-do list, to which anyone on the planet could add anything at will."

This is, as Burkeman develops in the essay, an important critique of time management techniques in general, not just Inbox Zero: perhaps you can become moderately more efficient, but what are you becoming more efficient at doing, and why does it matter? If there were a finite number of things that you had to accomplish, with leisure the reward at the end of the fixed task list, doing those things more efficiently would make perfect sense. But this is not the case in most modern life. Instead, we live in a world governed by Parkinson's Law: "Work expands to fill the time available for its completion." Worse, we live in a world where the typical employer takes Parkinson's Law, not as a statement on the nature of ever-expanding to-do lists, but as a challenge to compress the time made available for a task to try to force the work to happen faster.

Burkeman goes further into the politics, pointing out that a cui bono analysis of time management suggests that we're all being played by capitalist employers. I wholeheartedly agree, but that's worth a separate discussion; for those who want to explore that angle, David Graeber's Debt and John Kenneth Galbraith's The Affluent Society are worth your time.

What I want to write about here is why I still read (and recommend) time management literature, and how my thinking on it has changed.

I started in the same place that most people probably do: I had a bunch of work to juggle, I felt I was making insufficient forward progress on it, and I felt my day contained a lot of slack that could be put to better use. The alluring promise of time management is that these problems can be resolved with more organization and some focus techniques.
And there is a huge surge of energy that comes with adopting a new system and watching it work, since the good ones build psychological payoff into the tracking mechanism. Starting a new time management system is fun! Finishing things is fun!

I then ran into the same problem that I think most people do: after that initial surge of enthusiasm, I had lists, systems, techniques, data on where my time was going, and a far more organized intake process. But I didn't feel more comfortable with how I was spending my time, I didn't have more leisure time, and I didn't feel happier. Often the opposite: time management systems will often force you to notice all the things you want to do and how slow your progress is towards accomplishing any of them.

This is my fundamental disagreement with Getting Things Done (GTD): David Allen firmly believes that the act of recording everything that is nagging at you to be done relieves the brain of draining background processing loops and frees you to be more productive. He argues for this quite persuasively; as you can see from my review, I liked his book a great deal, and used his system for some time. But, at least for me, this does not work. Instead, having a complete list of goals towards which I am making slow or no progress is profoundly discouraging and depressing. The process of maintaining and dwelling on that list while watching it constantly grow was awful, quite a bit worse psychologically than having no time management system at all.

Mark Forster is the time management author who speaks the best to me, and one of the points he makes is that time management is the wrong framing. You're not going to somehow generate more time, and you're usually not managing minutes and seconds. A better framing is task management, or commitment management: the goal of the system is to manage what you mentally commit to accomplishing, usually by restricting that list to something far shorter than you would come up with otherwise.
How, in other words, to limit your focus to a small enough set of goals that you can make meaningful progress instead of thrashing.

That, for me, is now the merit and appeal of time (or task) management systems: how do I sort through all the incoming noise, distractions, requests, desires, and compelling ideas that life throws at me and figure out which of them are worth investing time in? I also benefit from structuring that process for my peculiar psychology, in which backlogs I have to look at regularly are actively dangerous for my mental well-being. Left unchecked, I can turn even the most enjoyable hobby into an obligation and then into a source of guilt for not meeting the (entirely artificial) terms of the obligation I created, without even intending to.

And here I think it has a purpose, but it's not the purpose that the time management industry is selling. If you think of time management as a way to get more things done and get more out of each moment, you're going to be disappointed (and you're probably also being taken advantage of by the people who benefit from unsustainable effort without real, unstructured leisure time).

I practice Inbox Zero, but the point wasn't to be more efficient at processing my email. The point was to avoid the (for me) psychologically damaging backlog of messages while acting on the knowledge that 99% of email should go immediately into the trash with no further action. Email is an endless incoming stream of potential obligations or requests for my time (even just to read a longer message) that I should normally reject. I also take the time to notice patterns of email that I never care about and then shut off the source or write filters to delete that email for me. I can then reserve my email time for moments of human connection, directly relevant information, or very interesting projects, and spend the time on those messages without guilt (or at least much less guilt) about ignoring everything else.

Prioritization is extremely difficult, particularly once you realize that true prioritization is not about first and later, but about soon or never. The point of prioritization is not to choose what to do first, it's to choose the 5% of things that you are going to do at all, convince yourself to be mentally okay with never doing the other 95% (without lying to yourself about how there will be some future point when you'll magically have more time), and vigorously defend your focus and effort for that 5%. And, hopefully, wholeheartedly enjoy working on those things, without guilt or nagging that there's something else you should be doing instead.

I still fail at this all the time. But I'm better than I used to be.

For me, that mental shift was by far the hardest part. But once you've made that shift, I do think the time management world has a lot of tools and techniques to help you make more informed choices about the 5%, and to help you overcome procrastination and loss of focus on your real goals.

Those real goals should include true unstructured leisure and "because I want to" projects. And hopefully, if you're in a financial position to do it, include working less on what other people want you to do and more on the things that delight you. Or at least making a well-informed strategic choice (for the sake of money or some other concrete and constantly re-evaluated reason) to sacrifice your personal goals for some temporary external ones.

Aaargh gcc 5.x You Suck

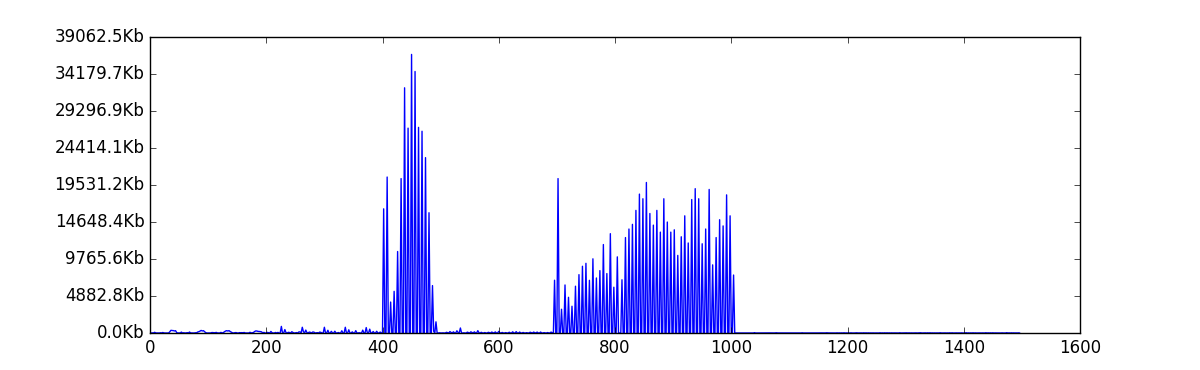

I had to write a quick program today which is going to be run many thousands of times a day, so it has to run fast. I decided to do it in C++ instead of the usual Perl or JavaScript because it seemed appropriate and I've been playing around a lot with C++ lately, trying to update my knowledge of its modern features. So 200

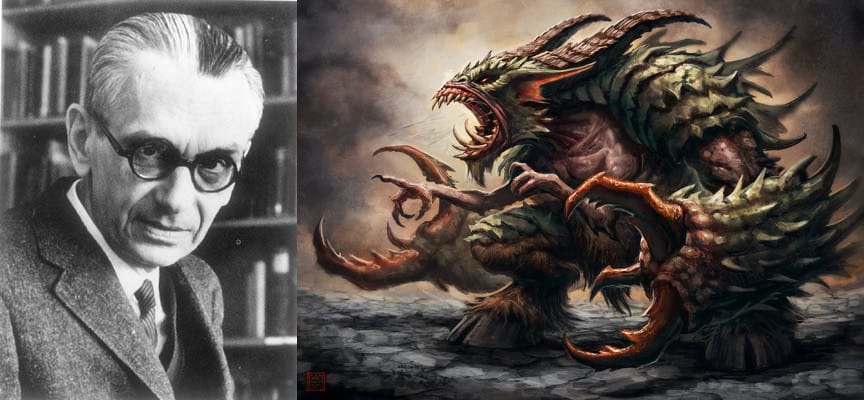

Explaining Gödel's theorems to students is a pain. Period. How can those poor creatures wrap their minds around a Completeness and an Incompleteness Proof? I understand that. But then, there are brave souls using Gödel's theorems to explain the world of demons to writers, in particular to answer the question:

Very impressive.

Found at

This is the second part in a series of articles about SRE, based on the talk I gave in the

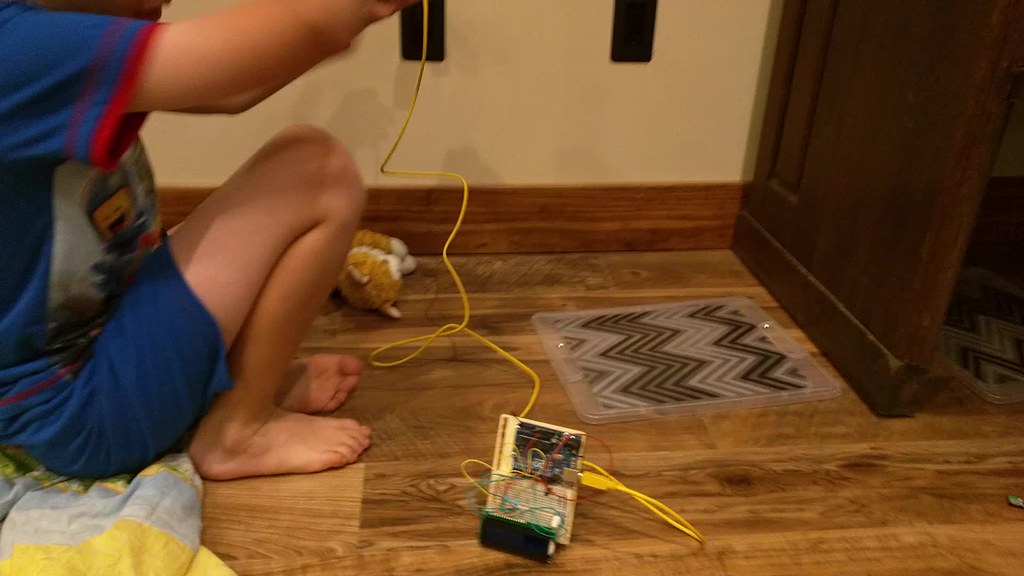

I love having guests give my classes :)

This time, we had Felipe Esquivel, a good friend who had been once before invited by me to the Faculty, about two years ago. And it was due time to invite him again!

Yes, this is the same Felipe I

A nice and entertaining talk. But I know you are looking for the videos! Get them, either

I'm

This could have been a blog post about toothbrushes for monkeys, which I seem to have dreamed about this morning. But then I was vaguely listening to the

A new version of

During the last week I was attending the first

While everybody was slapping themselves on the back about our officially confirmed contentment, the politicians tried to slip something under the radar. With elections expected in September, the press exposed a secret deal between the two biggest political parties,
